AI Factories: The Competitive Edge Your Cloud Strategy Can’t Afford to Miss

AI factories are quietly rewriting the rules of business and innovation—turning cloud data into intelligence at a speed and scale we’ve never seen before. In this article, I unpack how these “digital assembly lines” are outpacing traditional approaches, the real-world challenges of integrating them with your existing cloud, and why leaders who master this transformation will define the next era of competitive advantage. Ready to rethink your cloud strategy for the AI age? Dive in.

KK Ong

6/1/2025 · 6 min read

Why Your Cloud Data Strategy Needs a Rethink in the New Industrial Revolution

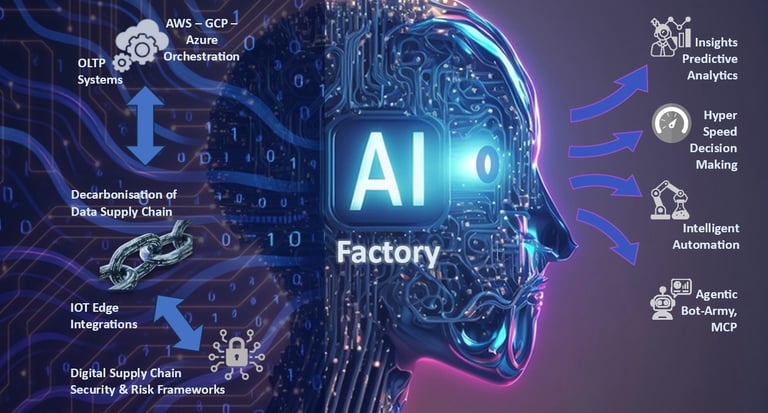

Imagine your AWS, Azure, or GCP cloud not as a finished product, but as a quarry—raw material waiting to be refined. Enter the AI factory: not a smokestack on the skyline, but a digital assembly line where data becomes intelligence, and intelligence becomes your next competitive edge.

The Intelligence Manufacturing Plant

Traditional data centres store and process information. AI factories manufacture intelligence.

They transform raw data into actionable insights by orchestrating the entire AI lifecycle—from ingestion to training, fine-tuning, and high-volume inference. As NVIDIA's CEO Jensen Huang puts it: “In the past, we wrote the software. In the future, AI will write the software.”

This shift is powered by three exponential scaling laws that traditional infrastructure simply cannot handle:

Pretraining scaling: Foundation model training has seen compute requirements increase by a staggering 50 million times in five years.

Post-training scaling: Fine-tuning for specific applications requires 30 times more compute during inference than pretraining.

Long thinking: Advanced AI applications that explore multiple possible responses before selecting the best one can consume up to 100 times more compute than traditional inference.
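To make the "long thinking" multiplier concrete, here is a minimal sketch of one simple way to explore several responses before choosing one, often called best-of-N sampling. It is an illustration only: `generate_candidate` and `score` are hypothetical stand-ins for a model call and a quality check, and real systems may use quite different search strategies. The point is that compute grows with the number of candidates, so 100 candidates means roughly 100 model calls for a single answer.

```python
import random
from typing import Tuple


def generate_candidate(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for one model call; returns a fake answer."""
    return f"{prompt} -> answer#{rng.randint(0, 999)}"


def score(candidate: str) -> int:
    """Hypothetical quality score; a real system might use a verifier or reward model."""
    return sum(ord(ch) for ch in candidate) % 101


def best_of_n(prompt: str, n: int, seed: int = 0) -> Tuple[str, int]:
    """Generate n candidates, keep the highest-scoring one, and count model calls."""
    rng = random.Random(seed)
    candidates = [generate_candidate(prompt, rng) for _ in range(n)]
    best = max(candidates, key=score)
    return best, len(candidates)


if __name__ == "__main__":
    answer, calls = best_of_n("route the delivery fleet", n=100)
    print(f"picked {answer!r} after {calls} model calls")
```

Raising `n` buys a better chance of a strong answer at a proportional cost in inference compute, which is the trade-off behind the scaling law above.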

The Physical World’s Digital Escape Velocity

While AI factories are revolutionising digital intelligence, their most disruptive impact may be in the physical world. The humble Computer-Aided Engineering (CAE) tools that engineers have used since the 1990s have evolved into what we now call Digital Twins—but with a twist that should give pause to any organisation moving at traditional speed.

Today’s AI factories don’t just create one digital twin; they spawn thousands simultaneously. A robot that would take years to develop through physical prototyping can now evolve through 2,000 parallel digital lives overnight. NVIDIA’s Omniverse platform speeds up simulations by as much as 1,200 times compared with legacy tools, collapsing decades of physical development into months or even weeks.

This isn’t just faster engineering—it’s the complete obliteration of physical constraints on innovation speed. While Australian enterprises and government agencies deliberate in quarterly planning cycles, overseas competitors are running thousands of parallel digital experiments daily, each one generating valuable training data that further accelerates development.

The gap between the digitally accelerated and the traditional isn’t growing linearly—it’s exploding exponentially. Those waiting for the “right time” to invest will find themselves competing against entities that have evolved through thousands of digital generations before their first physical prototype even leaves the drawing board.

What Is an AI Factory?

AI factories are specialised computing environments designed to transform raw data into actionable insights, automating the entire AI lifecycle—from data ingestion and model training to tuning and real-time inference. While traditional data centres process information, AI factories manufacture intelligence—at scale and at speed.

Why now?

Compute requirements for AI have exploded: training today’s foundation models demands 50 million times more compute than just five years ago.

Inference—the process of making predictions—now drives most of the cost and complexity.

The market is booming, projected to grow from $28.5B in 2024 to $362B by 2032, driven by three seismic shifts:

Inference Economics: 68% of AI operational costs now come from real-time decision-making, not model training.

Specialised Hardware: Custom chips like NVIDIA’s Blackwell GPUs (with 80% market share) and Google’s TPU v6e (delivering 58% lower inference costs).

Cloud Symbiosis: Major providers now offer "AI-optimised data pathways" (e.g., AWS Glue → SageMaker, Azure Fabric → Synapse ML).
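Headline multiples are easier to compare once they are converted into yearly rates. The sketch below does that for the two figures quoted above, assuming growth compounds at a constant rate each year (a simplification; real growth is lumpier). The helper name `annual_growth_factor` is mine, not an industry term.

```python
def annual_growth_factor(total_multiple: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_multiple over the given years."""
    return total_multiple ** (1.0 / years)


# Figures quoted in the article.
compute_per_year = annual_growth_factor(50_000_000, 5)
market_per_year = annual_growth_factor(362 / 28.5, 2032 - 2024)

print(f"compute: about {compute_per_year:.1f}x per year")
print(f"market: about {(market_per_year - 1) * 100:.1f}% per year")
```

Under that assumption, a 50-million-fold increase over five years works out to roughly 35 times more compute every year, and the market projection implies growth of roughly 37% per year.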

Why Are AI Factories Different?

Purpose-built: Optimised specifically for AI, not generic workloads.

Full-stack: Hardware (such as NVIDIA’s Blackwell GPUs), networking, and software are tightly integrated for maximum efficiency.

Output-focused: The key metric isn’t just uptime or storage, but “AI token throughput”—how much intelligence you can produce, per second, per dollar.
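As a rough illustration of the "per second, per dollar" idea, the sketch below turns sustained throughput and hourly cost into tokens per dollar. The function name and both deployments are hypothetical, and the numbers are placeholders rather than benchmarks.

```python
def tokens_per_dollar(tokens_per_second: float, cost_per_hour: float) -> float:
    """Tokens produced for each dollar spent, given sustained throughput and hourly cost."""
    if cost_per_hour <= 0:
        raise ValueError("cost_per_hour must be positive")
    return tokens_per_second * 3600 / cost_per_hour


# Hypothetical deployments; the numbers are placeholders, not benchmarks.
deployments = {
    "cluster_a": {"tokens_per_second": 12_000, "cost_per_hour": 40.0},
    "cluster_b": {"tokens_per_second": 20_000, "cost_per_hour": 90.0},
}

for name, d in deployments.items():
    print(name, round(tokens_per_dollar(**d)))
```

With these made-up inputs, the slower cluster produces more tokens per dollar, which is why raw throughput alone is a poor guide to factory efficiency.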

The NVIDIA Advantage: Building the Full-Stack Factory

NVIDIA dominates this space with 70–95% market share in AI accelerators. Their advantage isn’t just superior chips—it’s their integrated approach, where every layer from silicon to software is optimised for AI at scale. Their latest Blackwell platform delivers up to 50 times the output for AI reasoning compared to previous generations, supported by advanced networking technologies and comprehensive software tools. This full-stack approach has made NVIDIA-powered AI factories the go-to solution for enterprises and nations worldwide.

The Data Dilemma: Where AI Factories Meet Your Existing Cloud

Here’s where theory meets practice—and where many AI initiatives stumble.

Most enterprises already have their operational data spread across AWS, Azure, or GCP. These traditional cloud environments weren’t designed for the massive, continuous data flows that AI factories require. As any seasoned data engineer will tell you, this creates a fundamental tension:

The Synchronisation Trap: Real-time tapping of live transactional databases slows down critical business operations. But replicating those databases creates synchronisation inefficiencies and data freshness issues.

The Integration Challenge: AI factories need to seamlessly connect with existing cloud infrastructure without creating new data silos or requiring complete architectural overhauls.

Smart organisations are addressing this through three emerging patterns:

Hybrid Factory Architecture: Maintaining sensitive operational data in existing clouds while building purpose-built AI infrastructure for training and inference. This approach uses specialised data pipelines designed for high-throughput, low-latency connections between environments.

Data Mesh Strategies: Instead of centralising all data, creating domain-oriented, self-serve data products that can feed AI factories without disrupting operational systems.

Intelligent Caching Layers: Implementing sophisticated caching mechanisms that provide AI systems with the data they need without constant pulls from production databases.
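The caching pattern can be sketched in a few lines. What follows is a minimal read-through cache with a time-to-live, assuming the AI workload can tolerate data that is up to `ttl_seconds` old. The class name, the `loader` callback and the key format are all hypothetical; a production system would more likely use a dedicated cache service.

```python
import time
from typing import Any, Callable, Dict, Tuple


class ReadThroughCache:
    """Serves reads from memory and hits the source only when an entry is missing or stale."""

    def __init__(self, loader: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader      # hypothetical function that queries the source system
        self._ttl = ttl_seconds    # maximum staleness tolerated
        self._clock = clock        # injectable so the behaviour can be tested
        self._entries: Dict[str, Tuple[float, Any]] = {}
        self.source_reads = 0      # how often the source was actually touched

    def get(self, key: str) -> Any:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]       # fresh enough: no trip to the source
        value = self._loader(key)
        self.source_reads += 1
        self._entries[key] = (now, value)
        return value


if __name__ == "__main__":
    cache = ReadThroughCache(loader=lambda k: f"row for {k}", ttl_seconds=30)
    for _ in range(1000):
        cache.get("customer:42")
    print("source reads:", cache.source_reads)
```

The TTL is the dial between the two sides of the synchronisation trap: a longer TTL protects production systems, a shorter one keeps the AI side fresher.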

The Silent Handshake: Cloud ↔ AI Factory Integration

Forward-thinking enterprises are adopting three strategic approaches:

Directional Data Flows: Moving from traditional batch ETL to real-time CDC streams that feed AI factories and edge inference systems.

Protocol-Level Partnerships: Cloud providers and AI chipmakers are forging deep integrations (e.g., NVIDIA cuDF for direct Parquet ingestion from S3, Google TPU v5 with native BigQuery JDBC acceleration, AWS Nitro for dedicated PCIe lanes).

Schrödinger Architectures: Data pipelines that exist in both cloud and AI factory states simultaneously, enabling synchronisation across global regions with minimal lag.
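For readers who have not worked with CDC, the sketch below shows the core idea behind the move away from batch ETL: a downstream copy is kept current by replaying change events in log order instead of re-querying the source. The event shape is a simplified assumption loosely modelled on the `op`, `before` and `after` fields used by tools such as Debezium; real events carry more metadata, and the `id` key and function names here are hypothetical.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_change(replica: Dict[Any, Row], event: Dict[str, Any], key: str = "id") -> None:
    """Apply one change event to an in-memory replica keyed by primary key."""
    op = event["op"]
    if op in ("c", "r", "u"):      # create, snapshot read, update
        row: Row = event["after"]
        replica[row[key]] = row
    elif op == "d":                # delete
        before: Row = event["before"]
        replica.pop(before[key], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


def replay(events: Iterable[Dict[str, Any]]) -> Dict[Any, Row]:
    """Build replica state by applying events in log order."""
    replica: Dict[Any, Row] = {}
    for event in events:
        apply_change(replica, event)
    return replica


if __name__ == "__main__":
    log = [
        {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
        {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
        {"op": "u", "before": {"id": 1, "status": "new"},
         "after": {"id": 1, "status": "paid"}},
        {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
    ]
    print(replay(log))
```

Because the replica is built from the change log, the production database never has to serve the AI workload's reads directly.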

Suppliers: Who’s Leading—and Who’s Catching Up?

While NVIDIA currently dominates, competition is intensifying. AMD’s MI400 series and Intel’s Gaudi 3 chips are gaining ground. Cloud providers are developing custom silicon—AWS with Trainium, Google with TPUs, and Microsoft with Maia 100.

Google’s TPUs offer better performance per watt for specific workloads and faster time to first token under low concurrency. However, NVIDIA maintains advantages in memory capacity, ecosystem support, and cost efficiency.

For Decision Makers: What Should You Do Now?

For Business Leaders:

AI factories are not a “rip and replace” of your cloud—they’re an advanced layer that leverages your existing data investments.

The winners will be those who bridge operational data with AI factories efficiently, not just those who buy the most GPUs.

For IT and Data Leaders:

Prioritise CDC and hybrid architectures to avoid bogging down production systems.

Evaluate cloud-native AI gateways and caching solutions for seamless integration.

Monitor emerging standards for data interoperability and governance.

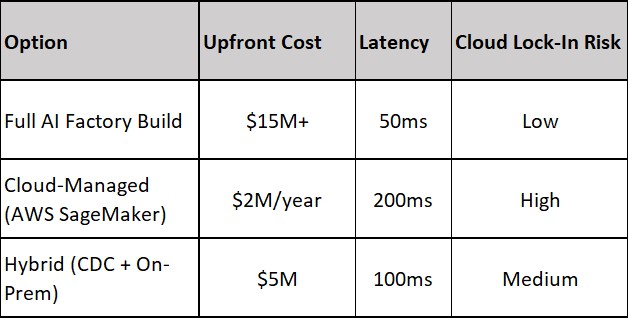

For CTOs: The Hybrid Cost Calculator

Source: AIMultiple 2025 Cloud GPU Benchmark

For Data Engineers: The “3AM Checklist”

This is a metaphor for the essential late-night checks that data engineers rely on—those critical tasks that keep AI and data pipelines running smoothly, even at 3AM when issues tend to surface.

CDC or Bust: Implement log-based CDC using tools like Striim or Debezium.

TCO Analysis: Compare cloud egress fees versus AI factory ROI (average 3:1 savings).

Observability First: Instrument pipelines with OpenTelemetry so that the metrics needed for model drift detection are captured from day one.
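To show what a drift metric might look like in practice, here is a minimal sketch of the Population Stability Index (PSI), one common way to compare a live feature distribution against a training baseline. It is my choice of metric, not something the checklist prescribes. The OpenTelemetry export step is deliberately left out; the resulting number would simply be recorded as a metric by whatever instrumentation you already run. The bin edges, sample data and function names are hypothetical.

```python
import math
from typing import List, Sequence


def histogram_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values per bin; bins are [edges[i], edges[i+1]), with the last bin closed.

    Values outside the edges are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = max(sum(counts), 1)
    return [c / total for c in counts]


def population_stability_index(baseline: Sequence[float], live: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (live - base) * ln(live / base); eps avoids log(0)."""
    base = histogram_shares(baseline, edges)
    cur = histogram_shares(live, edges)
    return sum((c - b) * math.log((c + eps) / (b + eps)) for b, c in zip(base, cur))


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
    live = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 1.0]
    print("PSI:", round(population_stability_index(baseline, live, edges), 3))
```

A PSI of zero means the two distributions match bin for bin. Alert thresholds vary by team and use case, so treat any cut-off as something to tune against your own data.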

What’s Next?

As AI factories evolve, three trends will shape their trajectory:

Specialised Integration: The future belongs to solutions that bridge AI factories with existing cloud infrastructure, minimising data movement while maximising intelligence extraction.

Energy Efficiency: As compute demands grow, innovations in chip design and cooling systems will be crucial for sustainable AI operations.

Hybrid Governance: Organisations will develop new frameworks that balance the agility needed for AI innovation with the governance required for operational systems.

Final Thought

The AI factory revolution is just beginning. Those who understand not just the technology but the practical challenges of integrating these new capabilities with existing infrastructure will emerge as leaders in this new era.

The question isn’t whether to participate in this transformation, but how to do so in a way that leverages your existing investments while positioning for an AI-powered future.

The future belongs to those who master the flow. Will your business be among them?

References

NVIDIA. (2025, March 18). AI Factories Are Redefining Data Centres, Enabling Next Era of AI. NVIDIA Blog.

Janakiram, M.S.V. (2025, March 23). What Is AI Factory, And Why Is Nvidia Betting On It? Forbes.

AIMultiple Research. (2025, May 23). Top 20 AI Chip Makers: NVIDIA & Its Competitors in 2025.

Trelis Research. (2025, May 14). TPUs vs GPUs: Key Differences for AI Development. Geeky Gadgets.

Wittenberg, N. (2025, January 15). AI Predictions for 2025. Armedia.

Stanford HAI. (2025). The 2025 AI Index Report.

United Nations. (2025, April 3). AI's $4.8 trillion future: UN warns of widening digital divide.

Brainpool AI. (2024, December 3). Challenges and Best Practices for Implementing AI in Cloud Environments.

NVIDIA. (2025, March 18). NVIDIA Blackwell Accelerates Computer-Aided Engineering Software by Orders of Magnitude for Real-Time Digital Twins.

Kiledjian. (2025, April 1). How NVIDIA's Digital Twin Technology Is Transforming AI Industry and Climate Innovation.