Seamless Data Migration - The PM & BA’s Playbook for Complex Digital Transformations

In today’s fast-moving digital landscape, data migration is no longer just a technical task - it’s the backbone of successful upgrades, consolidations, and AI innovation. This article shows why Senior Project Managers and Business Analysts must navigate both technical and stakeholder challenges, highlighting how automated discovery tools can transform migration risks into strategic advantages and help organisations move forward with speed and confidence.

KK Ong

4/19/2025 · 6 min read

In today’s era of rapid digital transformation, data migration is no longer just a back-office technicality - it’s become the linchpin for platform upgrades, system consolidations, and cloud transitions.

For Senior Project Managers (PMs) and Business Analysts (BAs), navigating these initiatives in large, matrixed organisations demands a blend of technical rigour, stakeholder alignment, and adaptive execution. In dynamic businesses, data migration is rarely an isolated technical task. As organisations race ahead to build foundations for artificial intelligence (AI), migrations are often linked to broader initiatives, with data being re-engineered in parallel to feed machine learning and large language models. Missteps can stall business for weeks and derail the AI uplift trajectory.

This article distils best practices and real-world insights, with a special focus on how automated discovery tools are revolutionising migration planning and execution.

The Data Migration Imperative

Data migration underpins initiatives like ERP modernisation, legacy system decommissioning, system consolidation, and cloud migration. Yet, complexity multiplies in large enterprises with sprawling data landscapes, strict compliance requirements, diverse stakeholders, and varying maturity levels.

Key Challenges:

Data sprawled across dozens of legacy systems with conflicting schemas

Regulatory constraints (GDPR, HIPAA, industry-specific) requiring granular data governance

Siloed business units with conflicting priorities complicating requirements gathering

Dirty data combined with cultural and legacy issues, leading planners to underestimate the time and resources needed for integrity uplifts and ownership overhauls

The Power of Automated Discovery Tools

Traditionally, discovery meant weeks or months of manual inventory and interviews—a process prone to human error and blind spots. Automated discovery tools have changed the game, complementing expert efforts with rapid, accurate, and comprehensive visibility into your IT and data landscape.

Why Automated Discovery Matters:

Speed: What once took months can now take days or hours

Accuracy: Tools map all applications, servers, and dependencies, minimising the risk of missing critical components

Risk Reduction: By surfacing hidden dependencies and obsolete systems, these tools prevent costly surprises mid-migration

Pillars of a Successful Migration Strategy

1. Pre-Migration Planning: Build on a Solid Foundation

Automated Discovery First

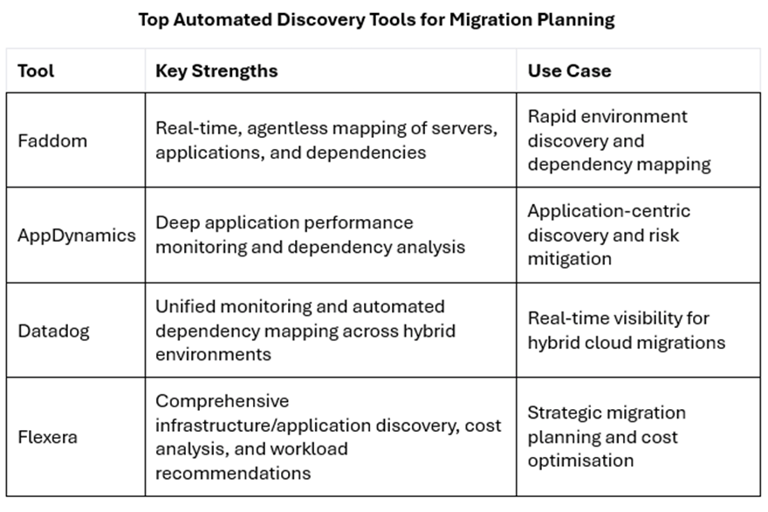

Deploy tools like Faddom, AppDynamics, Datadog, or Flexera to create a comprehensive inventory of your data assets, applications, and their dependencies.

Use these insights to identify redundancies, obsolete systems, and hidden dependencies that could derail migration.

Define Scope with Precision

Not all data are created equal – understand how different parts of the business use data and derivatives to grade clusters by quality and criticality.

Once the current state and pain points are well understood, prioritise business-critical data and processes, leveraging discovery tool outputs to focus on what matters most.

Use risk-weighted assessments. Techniques like data profiling can quantify quality issues (e.g. 30% duplicate customer records in a retail ERP system), and automated complexity assessments (such as those in Flexera or Infometry’s Metadata Discovery Tool) can estimate migration effort and risk.
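To make the duplicate-rate figure concrete, here is a minimal profiling sketch in plain Python. The sample records, the choice of key fields, and the helper names `normalise` and `duplicate_rate` are illustrative assumptions, not the output of any particular profiling tool.

```python
from collections import Counter

def normalise(record, key_fields):
    """Build a comparison key from trimmed, lower-cased field values."""
    return tuple(str(record.get(f, "")).strip().lower() for f in key_fields)

def duplicate_rate(records, key_fields):
    """Return the share of records that repeat another record's key."""
    if not records:
        return 0.0
    counts = Counter(normalise(r, key_fields) for r in records)
    # Every occurrence beyond the first one of a key counts as a duplicate.
    duplicates = sum(n - 1 for n in counts.values())
    return duplicates / len(records)

# Hypothetical customer records; the first two differ only in case and spacing.
customers = [
    {"name": "Ana Lim", "email": "ana@example.com"},
    {"name": "ana lim ", "email": "ANA@example.com"},
    {"name": "Raj Patel", "email": "raj@example.com"},
    {"name": "Mei Chen", "email": "mei@example.com"},
]

rate = duplicate_rate(customers, ["name", "email"])
print(f"Duplicate rate: {rate:.0%}")  # prints "Duplicate rate: 25%"
```

Exact-match keys like this will understate duplicates in real data (typos, nicknames, merged accounts), so treat the result as a lower bound when sizing cleansing effort.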

Architect a Phased Approach

Let discovery tool dependency maps inform your migration sequencing: migrate low-risk, low-complexity workloads first, adjusting the order for operational constraints.

Use parallel run validation—maintain legacy systems during initial phases to cross-verify outputs.

Don’t underestimate the value of subject matter experts (SMEs)—a seasoned project manager can engage business veterans to negotiate win-wins, elicit invaluable insights, and understand operational complexity at the coalface.
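One way to turn a dependency map into a migration sequence is a topological sort, sketched below with Python's standard `graphlib` module (Python 3.9+). The system names, the risk scores, and the rule that a system moves only after everything it depends on are assumptions for illustration; a real sequence would come from discovery tool exports and SME input.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each system lists the systems it depends on.
dependencies = {
    "reporting": {"crm", "billing"},
    "billing": {"customer_master"},
    "crm": {"customer_master"},
    "customer_master": set(),
}

# Hypothetical risk scores (lower means lower risk).
risk = {"customer_master": 3, "billing": 2, "crm": 1, "reporting": 1}

def migration_waves(deps, risk_scores):
    """Group systems into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        # Within a wave, list the lowest-risk systems first.
        ready = sorted(sorter.get_ready(), key=lambda s: (risk_scores[s], s))
        waves.append(ready)
        sorter.done(*ready)
    return waves

for number, wave in enumerate(migration_waves(dependencies, risk), start=1):
    print(f"Wave {number}: {', '.join(wave)}")
# Wave 1: customer_master
# Wave 2: crm, billing
# Wave 3: reporting
```

A circular dependency surfaces as an error here, which is itself useful: it flags systems that may need to move together or be decoupled before migration.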

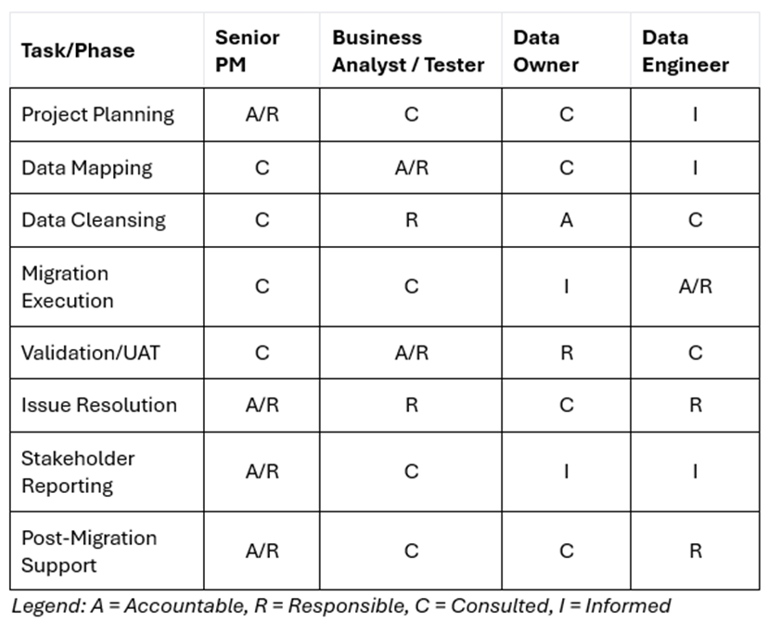

2. Lean Team Structure: Roles in Matrixed Environments

Analysts collaborating with technical specialists and business experts to understand data models

3. Execution: Balance Speed and Accuracy

Data Quality as a Non-Negotiable

Cleanse before migration—resolve inconsistencies (e.g., mismatched country codes) in staging areas. Use automated discovery and metadata tools (e.g., Infometry) to profile and cleanse data, flagging duplicates and inconsistencies.

Automate validation—deploy tools like Great Expectations to flag anomalies in real time.
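As a small illustration of cleansing in a staging area, the sketch below standardises country values and sets aside rows it cannot resolve. It is plain Python rather than the Great Expectations API, and the alias table, field names, and sample rows are hypothetical.

```python
# Hypothetical mapping from legacy values to ISO 3166-1 alpha-2 codes.
COUNTRY_ALIASES = {
    "au": "AU", "aus": "AU", "australia": "AU",
    "sg": "SG", "sgp": "SG", "singapore": "SG",
    "gb": "GB", "uk": "GB", "united kingdom": "GB",
}

def cleanse_country(value):
    """Return the standard code, or None when the value is not recognised."""
    if value is None:
        return None
    return COUNTRY_ALIASES.get(value.strip().lower())

def cleanse_rows(rows):
    """Split staged rows into cleansed rows and rows needing manual review."""
    clean, rejected = [], []
    for row in rows:
        code = cleanse_country(row.get("country"))
        if code is None:
            rejected.append(row)  # left untouched for a data owner to resolve
        else:
            clean.append({**row, "country": code})
    return clean, rejected

# Hypothetical staged rows
staged = [
    {"id": 1, "country": "Australia"},
    {"id": 2, "country": " uk "},
    {"id": 3, "country": "Atlantis"},
]

clean, rejected = cleanse_rows(staged)
print(f"{len(clean)} cleansed, {len(rejected)} flagged for review")
```

Routing unrecognised values to a review queue, rather than guessing, keeps the decision with the data owner and leaves an audit trail of what was changed.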

Agile, Iterative Delivery

Use insights from dependency maps to break migration into manageable sprints and isolate issues.

Maintain parallel runs where possible, using tool-generated reports to cross-validate outputs.

Establish fallback protocols—predefine rollback criteria (e.g., >3% data loss) to avoid cascading failures.
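A predefined rollback criterion can be written down as a simple check that runs after each load. The sketch below uses the 3% data-loss example from above; measuring loss by comparing record counts, and the function names, are assumptions made for illustration.

```python
ROLLBACK_LOSS_THRESHOLD = 0.03  # the ">3% data loss" example criterion

def data_loss_ratio(source_count, target_count):
    """Share of source records that did not arrive in the target."""
    if source_count <= 0:
        raise ValueError("source_count must be positive")
    missing = max(source_count - target_count, 0)
    return missing / source_count

def should_roll_back(source_count, target_count,
                     threshold=ROLLBACK_LOSS_THRESHOLD):
    """True when measured loss exceeds the agreed threshold."""
    return data_loss_ratio(source_count, target_count) > threshold

# Hypothetical counts taken after a migration batch
print(should_roll_back(10_000, 9_600))  # 4% loss, prints True
print(should_roll_back(10_000, 9_800))  # 2% loss, prints False
```

Agreeing the threshold and the measurement method with stakeholders before cutover means the rollback decision is a lookup, not a debate held under pressure.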

Critical Competencies for Senior PMs and BAs

For Senior PMs:

Negotiate SLAs for third-party tools (e.g. cloud storage latency <50ms) and integrate discovery tool outputs into risk logs and project plans.

Garner respect and maintain mindshare from all critical stakeholders, keeping key players informed and “in the tent”.

Navigate the politics of data ownership and responsibilities in legacy environments.

Use cost and effort estimates from tools like Flexera for budgeting and contingency planning.

Tailor stakeholder communications—updates for executives (ROI & business risk management) vs. engineers (technical bottlenecks).

For Business Analysts:

Leverage automated mapping and complexity analysis for accurate data mapping and UAT scenarios.

Use tool-generated documentation to streamline requirements gathering and stakeholder workshops.

Connect with business and technical SMEs to inform robust migration strategies. This combined knowledge is invaluable for crafting realistic User Acceptance Testing (UAT) plans that simulate real-world scenarios.

Use process discovery tools like Apromore, Celonis, or Fluxicon, and run business process re-engineering workshops with time-poor stakeholders (often piggy-backing on data migrations for efficiency).

Handle sensitive data, or substitute de-identified data, in compliance with privacy and confidentiality requirements.

Post-Migration: Sustaining Success

Monitor performance and data quality with tools like Datadog and AppDynamics.

Archive legacy systems with read-only access, using automated reports to validate completeness.

Document all transformation rules and maintain a knowledge base for ongoing support.

Drive decommissioning strategies in compliance with security and legal requirements.

Conclusion: Turning Migration Into Competitive Advantage

Automated discovery tools can play a pivotal role in transforming data migration from a risky, manual, technical process into a strategic capability uplift. By combining these tools with the ability to connect with time-poor stakeholders, Senior PMs and BAs can deliver migrations that are faster and safer, and that underpin broader business initiatives, unlocking the full value of digital transformation.

Seamless AI is often underpinned by complex but robust data engineering behind the scenes

By mastering these strategies and harnessing the right automated discovery tools, you’ll position your team for next-level value add—ready to lead your organisation through the next wave of digital transformation.

Additional Section (By popular request):

Common Challenges in Data Migration Projects

Here's an overview of the key challenges faced during data migration projects:

1. Data Quality Issues

Inconsistent data formats across source systems requiring standardisation

Duplicate records needing reconciliation and deduplication strategies

Missing or incomplete data fields that must be addressed before migration

Legacy data structures incompatible with new system requirements

2. Compatibility Challenges

Data type mismatches between source and target systems requiring transformation

Character encoding problems, especially with international or special character data

Schema differences necessitating complex mapping and transformation rules

API limitations when extracting from legacy systems with outdated interfaces

3. Performance Bottlenecks

Network bandwidth constraints during large data transfers across environments

Processing limitations when transforming complex datasets with interdependencies

Database performance issues during bulk loading operations

Timeout problems with large transaction volumes requiring batching strategies
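A batching strategy for large loads can be as simple as splitting the rows into fixed-size chunks and loading each chunk as its own transaction. The sketch below assumes a hypothetical `load_batch` callable supplied by the caller; the batch size is a tuning parameter, not a recommendation.

```python
from itertools import islice

def batched(rows, batch_size):
    """Yield consecutive lists of at most batch_size rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

def load_in_batches(rows, load_batch, batch_size=1000):
    """Send rows to load_batch one chunk at a time; return rows loaded."""
    loaded = 0
    for batch in batched(rows, batch_size):
        load_batch(batch)  # hypothetical loader, e.g. one transaction per call
        loaded += len(batch)
    return loaded

# Usage with a stand-in loader that only records batch sizes
sizes = []
total = load_in_batches(range(2500), lambda b: sizes.append(len(b)))
print(total, sizes)  # prints "2500 [1000, 1000, 500]"
```

Smaller batches reduce the chance of a timeout and limit how much work is repeated after a failure, at the cost of more round trips.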

4. Business Continuity Concerns

Minimising downtime during cutover periods through careful planning

Maintaining data integrity during transition with verification processes

Supporting parallel operations during phased migrations to reduce business impact

Ensuring critical business functions remain operational throughout migration

5. Governance and Compliance

Meeting data sovereignty requirements, especially in government contexts

Maintaining comprehensive audit trails throughout the migration process

Ensuring proper data classification and handling in the new environment

Managing access controls and permissions to maintain security posture

6. Testing and Validation Challenges

Creating representative test data sets that exercise all system functions

Verifying data completeness and accuracy after migration through reconciliation

Validating business rules and calculations in the new environment

Reconciling financial and critical operational data to ensure consistency
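Reconciliation after migration often comes down to two questions: did every record arrive, and does each one still hold the same values? The sketch below answers both by comparing keys and per-row fingerprints. The field names and sample rows are hypothetical, and it assumes both sides fit in memory and format their values identically.

```python
import hashlib

def row_fingerprint(row, fields):
    """Hash the chosen fields so rows can be compared cheaply."""
    payload = "|".join(str(row.get(f, "")) for f in fields)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def reconcile(source_rows, target_rows, key, fields):
    """Report keys that are missing, unexpected, or changed in the target."""
    source = {r[key]: row_fingerprint(r, fields) for r in source_rows}
    target = {r[key]: row_fingerprint(r, fields) for r in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }

# Hypothetical invoice rows from the legacy and new systems
legacy = [
    {"id": 1, "amount": "10.00"},
    {"id": 2, "amount": "20.00"},
    {"id": 3, "amount": "30.00"},
]
migrated = [
    {"id": 1, "amount": "10.00"},
    {"id": 2, "amount": "25.00"},
]

report = reconcile(legacy, migrated, key="id", fields=["amount"])
print(report)
# {'missing_in_target': [3], 'unexpected_in_target': [], 'mismatched': [2]}
```

For financial data, control totals (for example, the sum of amounts per period) are a useful complement, since they are easy for business owners to sign off.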

7. Sensitive Data Protection

Implementing data masking and obfuscation for sensitive information in test environments

Establishing role-based access controls to limit exposure of confidential data

Creating synthetic test data that mimics production patterns without using real PII

Developing incident response protocols for potential data exposure scenarios

Implementing comprehensive monitoring and auditing of sensitive data access
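One common masking approach for test environments is keyed pseudonymisation: each sensitive value is replaced with a token derived from it and a secret key, so the same input always maps to the same token and joins between tables still work. The sketch below uses Python's standard `hmac` module; the field names and the key are placeholders, and in practice the key would be held in a secrets manager.

```python
import hashlib
import hmac

def pseudonymise(value, secret_key):
    """Derive a stable, non-reversible token from a sensitive value."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def mask_record(record, sensitive_fields, secret_key):
    """Return a copy of the record with sensitive fields replaced by tokens."""
    masked = dict(record)
    for field in sensitive_fields:
        if masked.get(field) is not None:
            masked[field] = pseudonymise(str(masked[field]), secret_key)
    return masked

# Placeholder key and hypothetical record, for illustration only
key = b"replace-with-a-managed-secret"
customer = {"id": 42, "email": "ana@example.com", "segment": "retail"}

masked = mask_record(customer, ["email"], key)
print(masked["id"], masked["segment"], masked["email"] != customer["email"])
# prints "42 retail True"
```

Pseudonymised data can still count as personal data under regimes such as GDPR while the key exists, so access controls and auditing remain necessary in test environments.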

8. Change Management and Stakeholder Alignment

Managing stakeholder expectations through transparent communication

Training end users on new data structures and access methods

Documenting data lineage to maintain institutional knowledge

Securing executive support and buy-in for critical decision points during migration

Aligning technical migration activities with business priorities and timelines